TL;DR: Modelling is a fundamental skill for Software Testers. Applying modelling to Software Testing itself lets us communicate our testing in simple terms and explore how its concepts build on, and relate to, each other.


Fundamentals

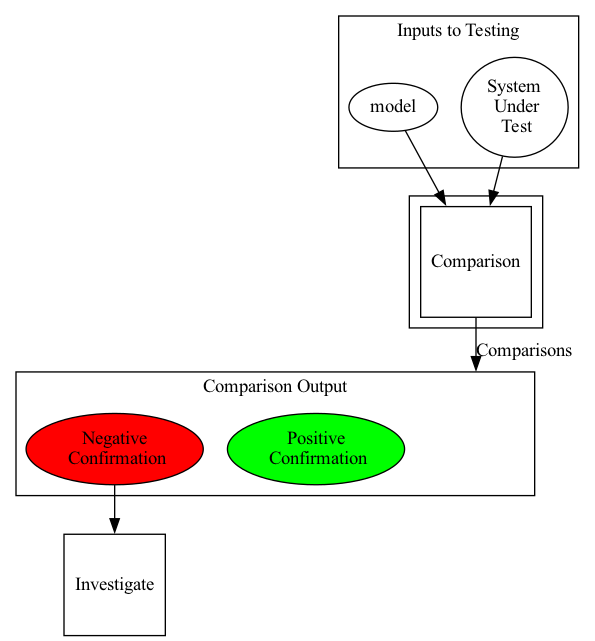

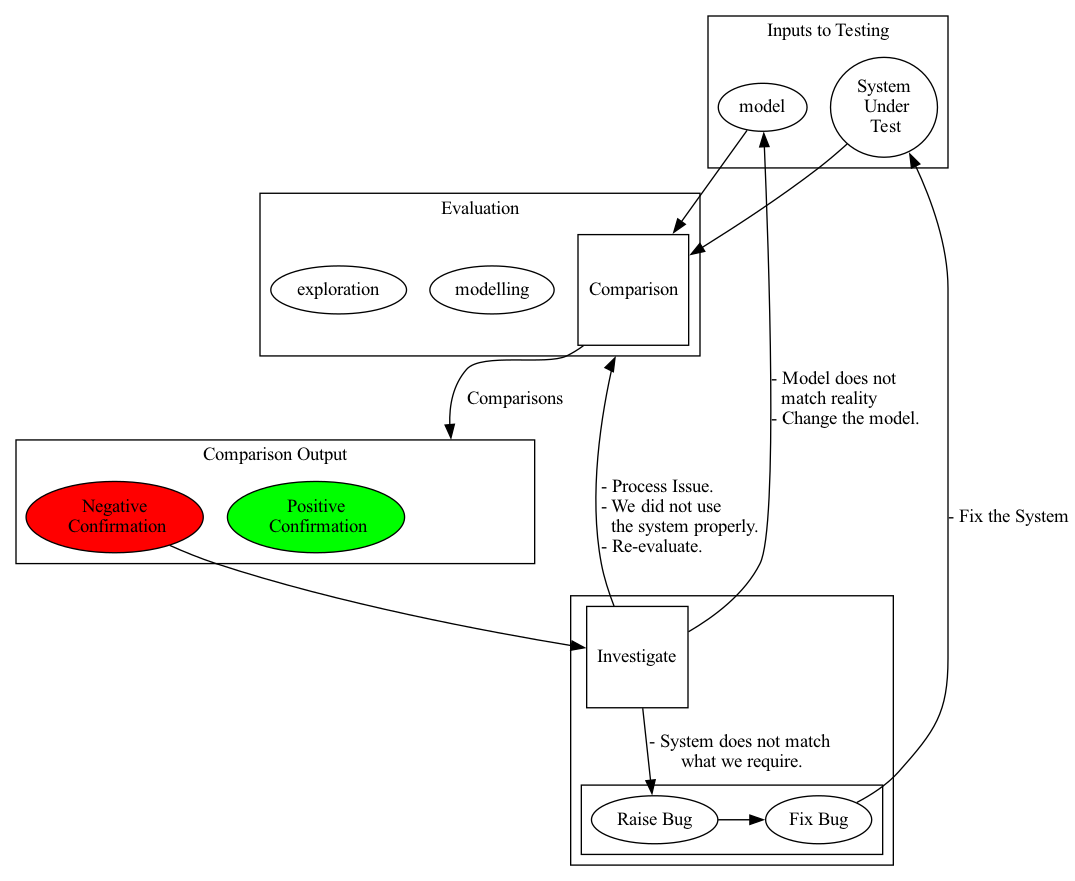

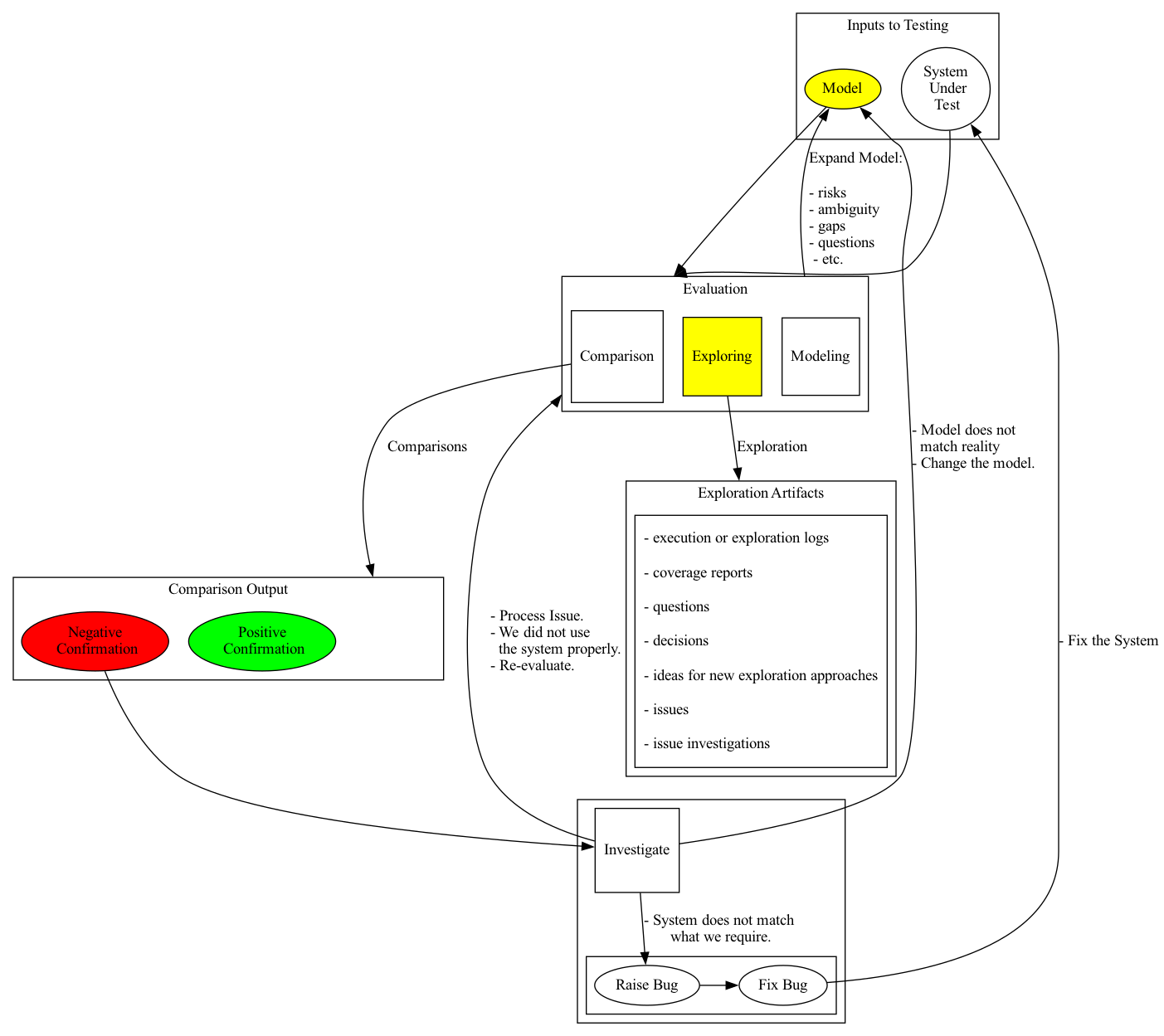

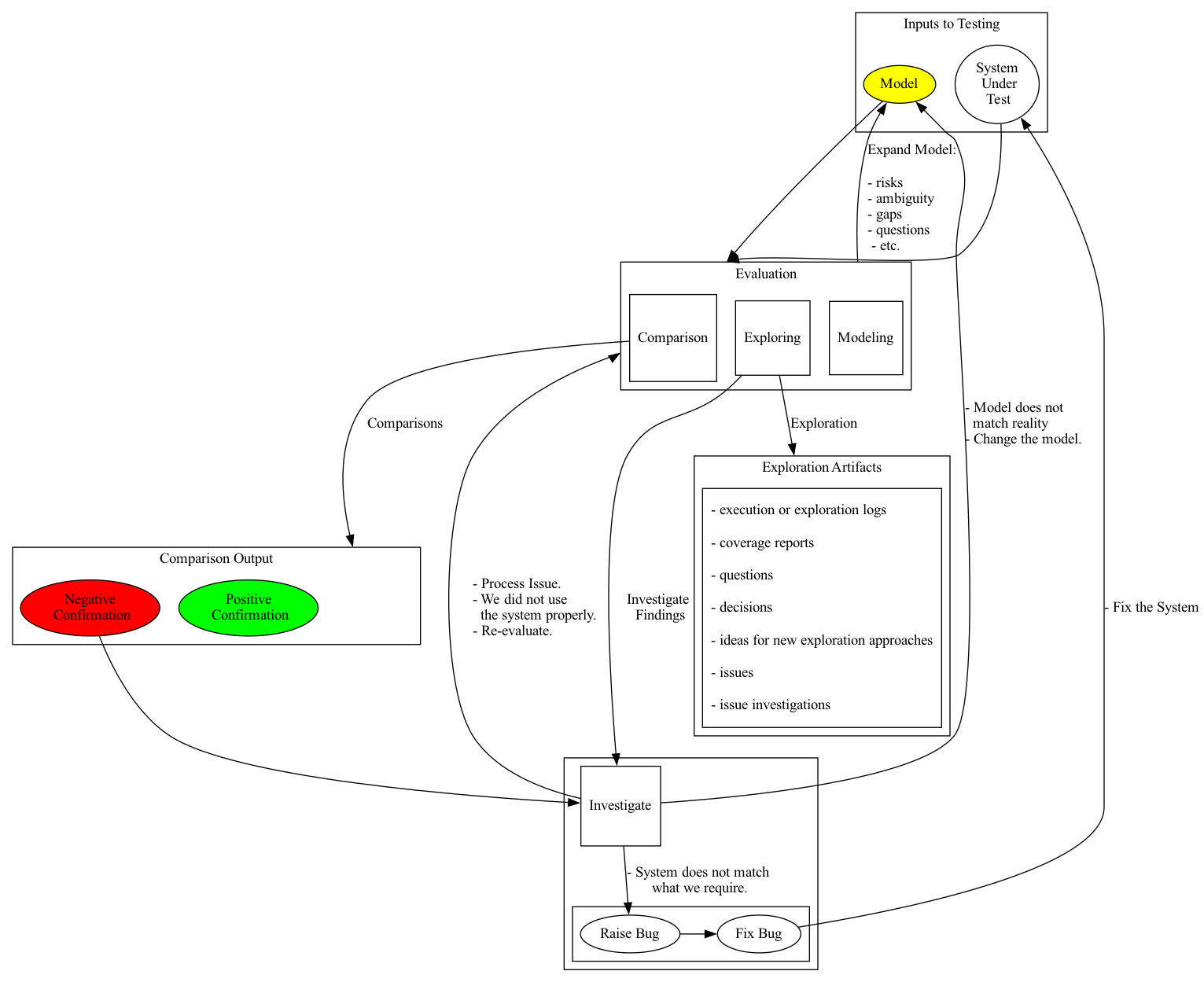

Testing is underpinned by models, e.g. requirements, user stories and scenarios.

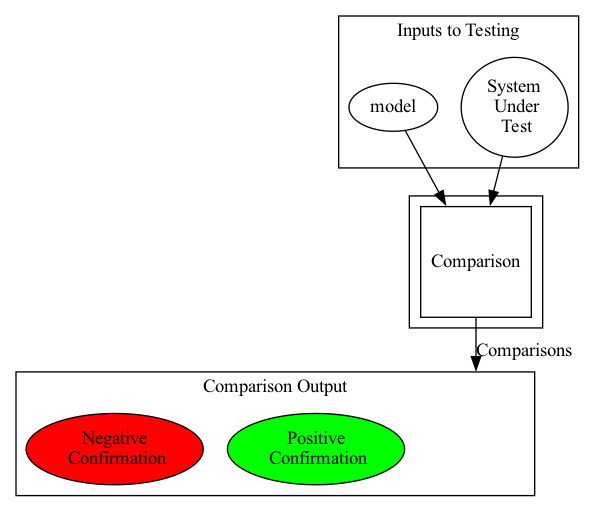

We also have the ‘thing we are comparing with’, usually a System Under Test (SUT).

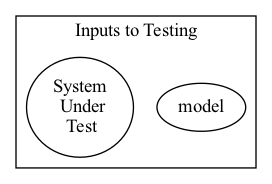

The model and the SUT are inputs to our testing process.

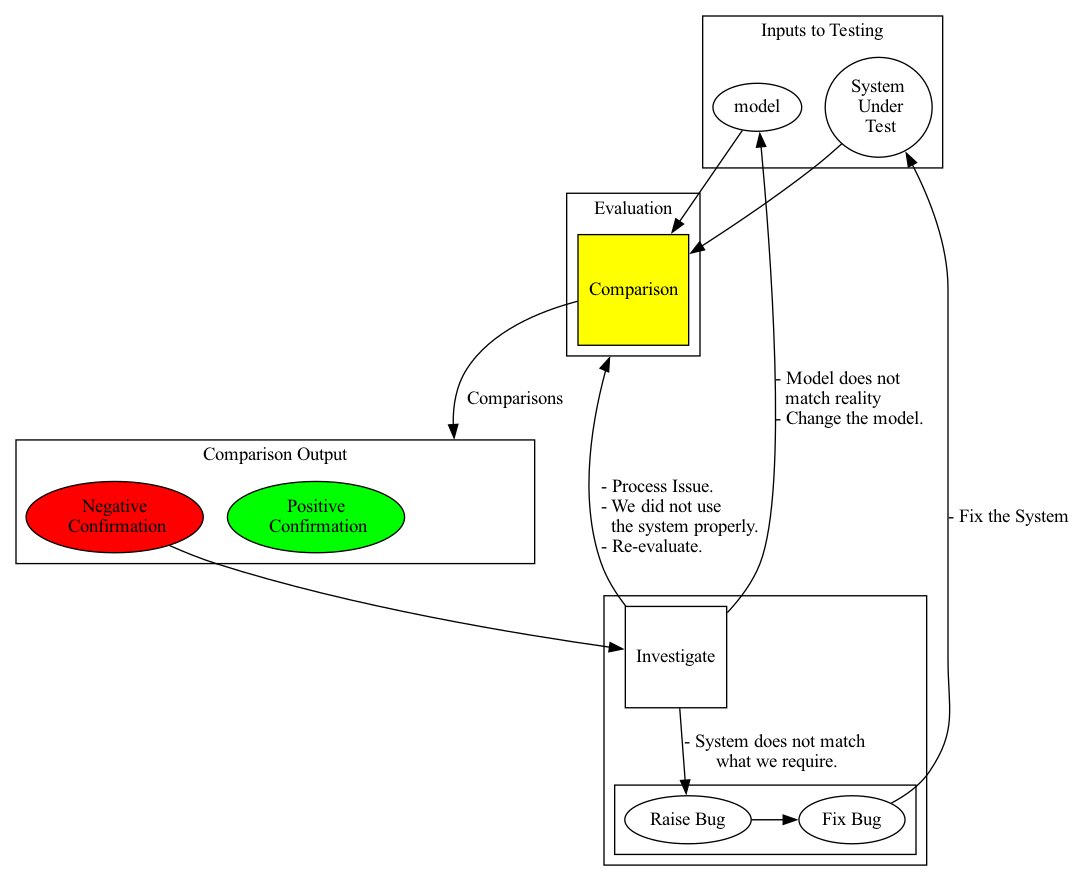

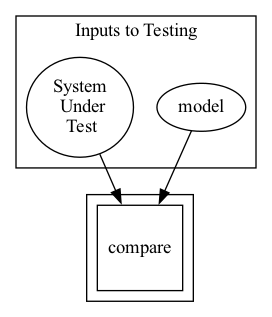

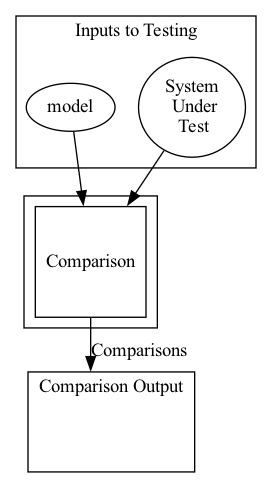

Evaluating by Comparing

The simplest way to use a model for testing is to compare the model with the System Under Test.

This comparison generates results.

Evidential Observation Output can be used for negative or positive confirmation.

Positive confirmation is often viewed as a ‘Test Pass’, and basically means ‘when we did something, we observed that the system behaved the way we expected’.

NOTE: this does not mean that the system “works”; it just means that at some point in time, given a specific set of inputs and process, we observed that the system behaved as we expected.

Negative confirmation is often called a ‘Test Fail’.

It means we observed something we did not expect.

These positive and negative reports are a visible output from the Testing process.
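A minimal sketch of the comparison, assuming both the model and the System Under Test can be treated as functions from an input to a result. The `Observation` record, the `compare` function and the deliberately buggy `sut_abs` are hypothetical, invented for illustration; the model is simply Python's built-in `abs`.

```python
from dataclasses import dataclass
from typing import Callable, Iterable, List


@dataclass(frozen=True)
class Observation:
    """One piece of evidence: what the model expected and what the SUT did."""
    test_input: int
    expected: int
    observed: int

    @property
    def positive(self) -> bool:
        # Positive confirmation ('Test Pass'): observed matched expected.
        return self.expected == self.observed


def compare(model: Callable[[int], int],
            sut: Callable[[int], int],
            inputs: Iterable[int]) -> List[Observation]:
    """Run each input through the model and the SUT and record both results."""
    return [Observation(i, model(i), sut(i)) for i in inputs]


def sut_abs(x: int) -> int:
    """Hypothetical System Under Test with a boundary bug at x == -1."""
    return -x if x < -1 else x


if __name__ == "__main__":
    for obs in compare(abs, sut_abs, [-2, 0, 3]):
        print(obs, "PASS" if obs.positive else "FAIL")
    # All of the above pass, yet the SUT is still wrong for -1.
    print(compare(abs, sut_abs, [-1]))
```

The inputs -2, 0 and 3 all give positive confirmation, while -1 gives a negative one. This mirrors the NOTE above: a pass only tells us what we observed for the inputs we actually tried.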

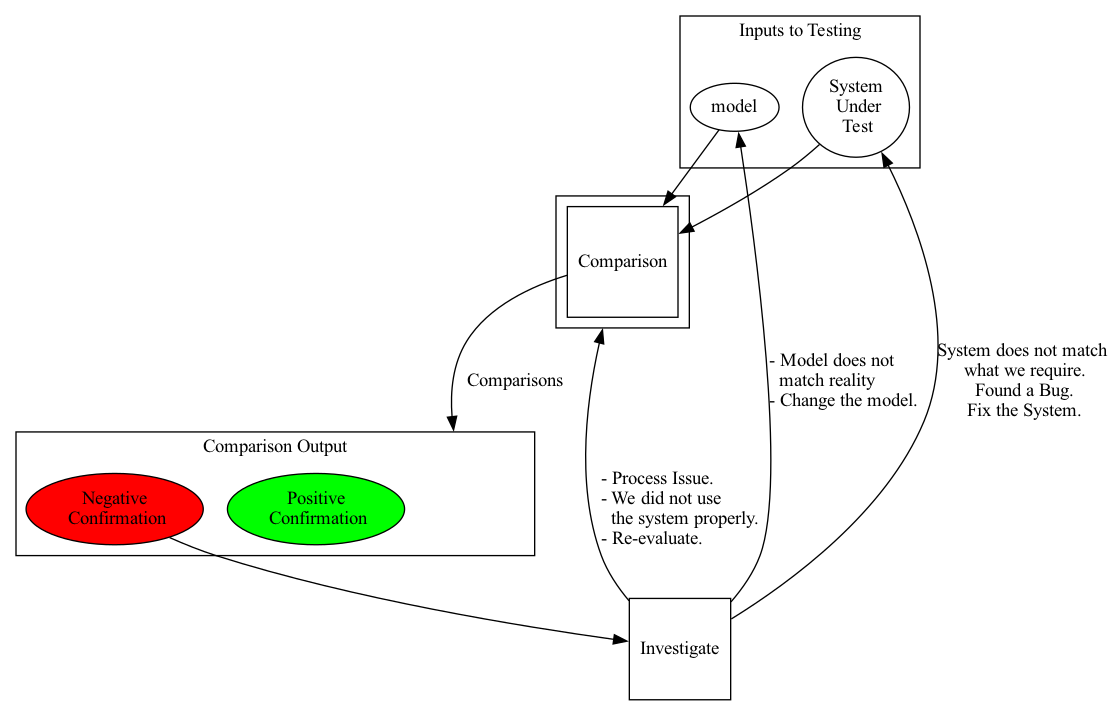

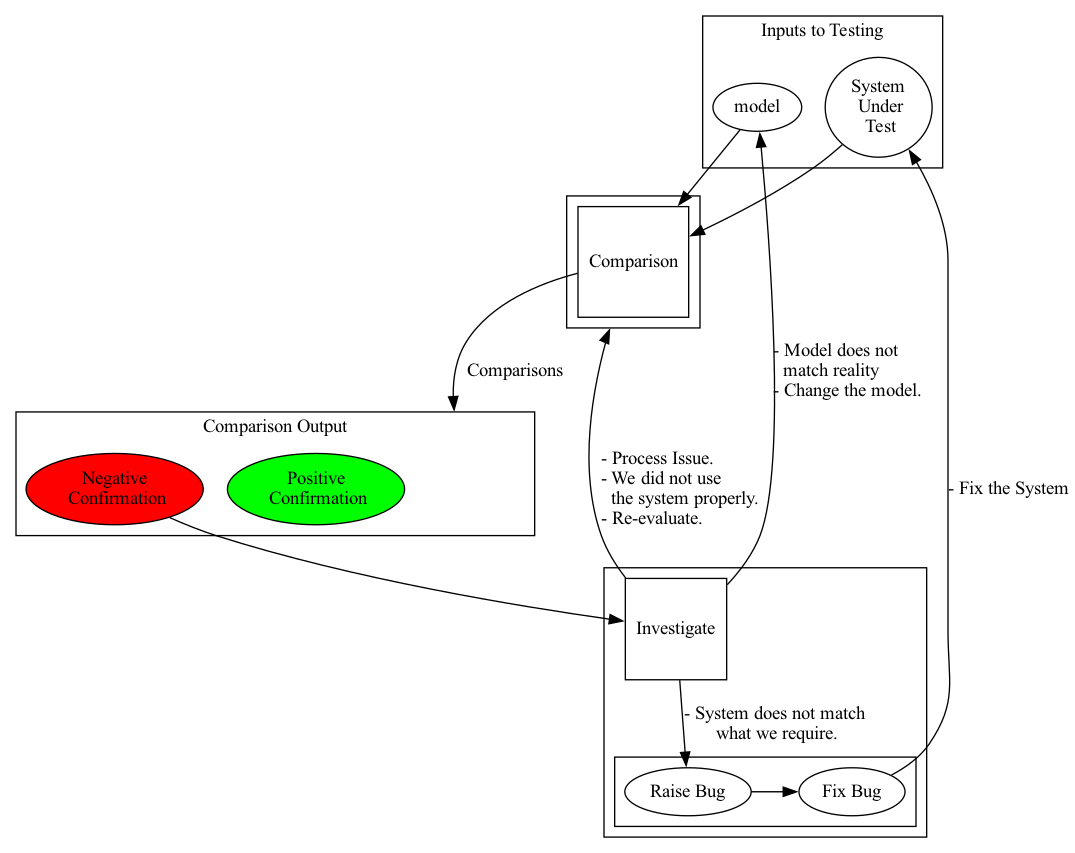

Investigate Information

When a comparison gives negative confirmation, we have to investigate what failed.

This might mean:

- a problem with our model

- a problem with the System Under Test

- a problem with our comparison process

Whatever the cause, it is something we have to investigate.

Information Leads to Change

Changes to the System Under Test might need to go through a defect process rather than being fixed directly.

Comparing a model with the ‘thing’ isn’t the only process Software Testing involves; it’s just one of the most obvious ways we have of evaluating the Software.

And Software Testing is a process of evaluating the software.

We can directly compare Requirements with the Software for high-level, surface positive or negative confirmation that a requirement has been implemented.

For example, “A User must be able to login with their username and password.” can be confirmed quite simply by logging in as an existing user with their correct password.
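That surface-level check could be sketched as follows. `FakeLoginSystem` is a hypothetical in-memory stand-in for the real System Under Test, `confirm_login_requirement` is a hypothetical helper, and the username and password are made-up test data.

```python
class FakeLoginSystem:
    """Hypothetical stand-in for a System Under Test with a login feature."""

    def __init__(self, users: dict) -> None:
        self._users = dict(users)  # username -> password (test data only)
        self.logged_in = set()

    def login(self, username: str, password: str) -> bool:
        if self._users.get(username) == password:
            self.logged_in.add(username)
            return True
        return False


def confirm_login_requirement(system: FakeLoginSystem,
                              username: str, password: str) -> str:
    """Check 'A User must be able to login with their username and password.'"""
    if system.login(username, password) and username in system.logged_in:
        return "positive confirmation"
    return "negative confirmation"


if __name__ == "__main__":
    sut = FakeLoginSystem({"alice": "correct-horse"})
    print(confirm_login_requirement(sut, "alice", "correct-horse"))
```

A positive result here only shows the requirement held for this one user and password; it says nothing about the situations the requirement leaves unstated.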

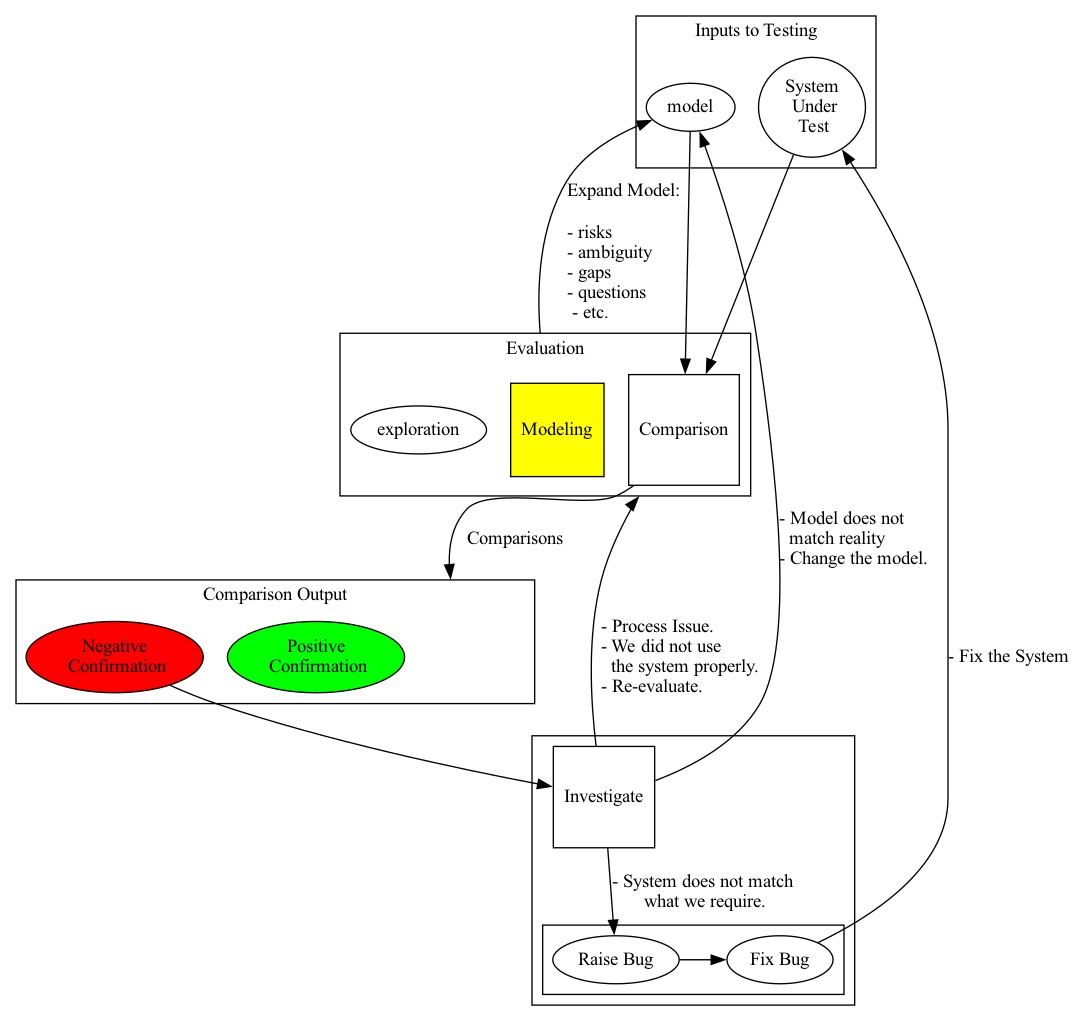

Model Exploration

But during the Software Testing process we also try to expand our model beyond Requirements.

We also explore our model in conjunction with the system to learn more about our model and, as a consequence, the system itself.

Software Testing has to explore our model to identify:

- risks

- ambiguity

- gaps in the models and implementation

- etc.

This raises questions which we have to investigate and discuss.

The ongoing process of modelling is how we generate our ideas for testing and how we find new ways to explore the software.

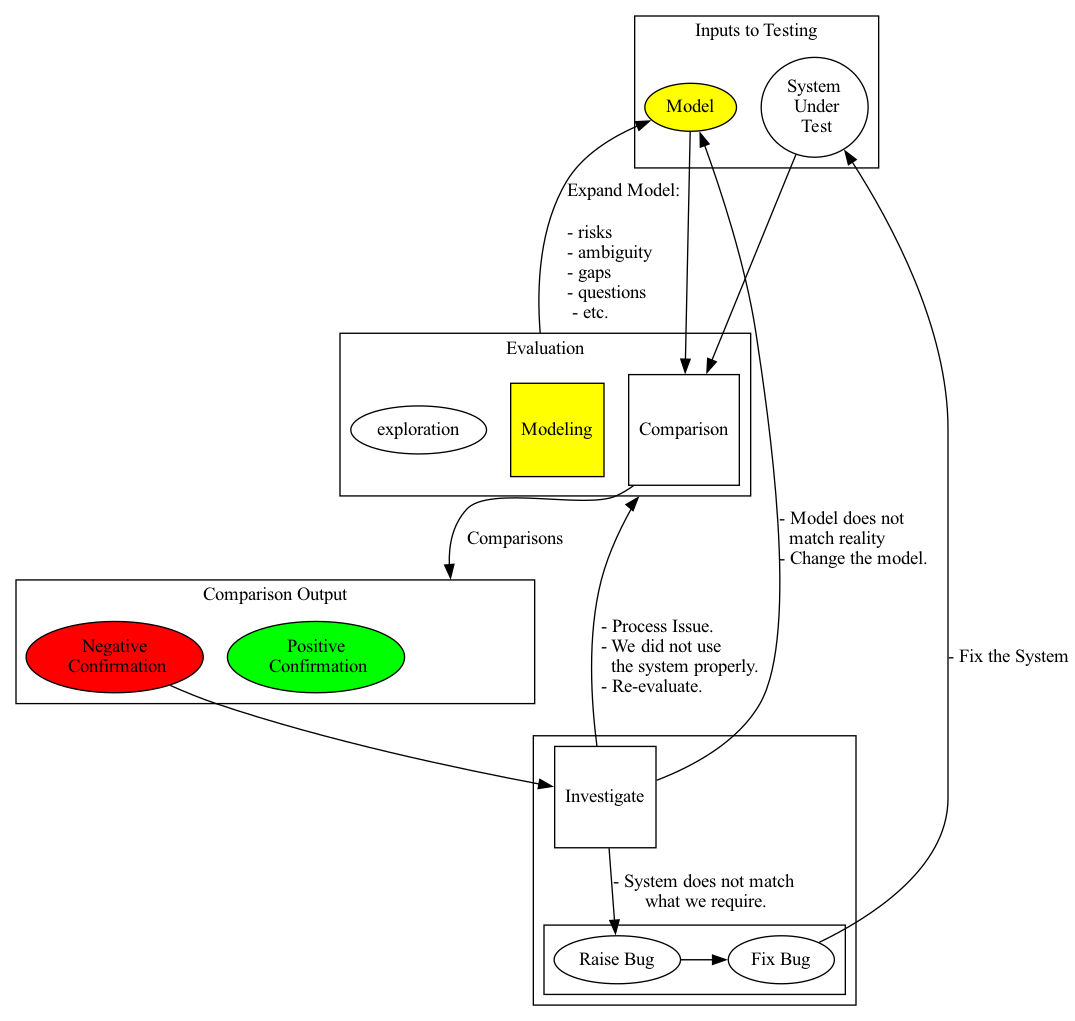

The model is both an input to Testing and an output from Testing.

Parts of the model will be stored in the Tester’s head as a mental model.

Other parts will be visible in the form of diagrams, reports, lists of test ideas, etc.

Software Testing then has to explore the model in conjunction with the system to identify whether any of the risks, ambiguities and gaps manifest as issues or might require reworking the implementation.

A Little About Ambiguity

One source of ambiguity is that requirements are naturally incomplete, because they rely on assumptions about shared understanding.

The requirement for logging into the system does not describe:

- what happens if the user is already logged in?

- what message is displayed if the user gets the password wrong?

- what message should be displayed if the user does not exist?
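One way to make that incompleteness visible is to write the requirement model down and ask it about every scenario we can think of; any scenario the model has no answer for becomes a question to investigate. A minimal sketch, in which the scenario names, `requirement_model` and `find_ambiguities` are all hypothetical:

```python
from typing import Callable, List, Optional

# Hypothetical requirement model: only the behaviour the requirement states.
SPECIFIED = {
    "existing user, correct password": "user is logged in",
}


def requirement_model(scenario: str) -> Optional[str]:
    """Return the expected outcome, or None if the requirement is silent."""
    return SPECIFIED.get(scenario)


def find_ambiguities(model: Callable[[str], Optional[str]],
                     scenarios: List[str]) -> List[str]:
    """List the scenarios for which the model gives no expected outcome."""
    return [s for s in scenarios if model(s) is None]


SCENARIOS = [
    "existing user, correct password",
    "user already logged in",
    "existing user, wrong password",
    "user does not exist",
]

if __name__ == "__main__":
    for question in find_ambiguities(requirement_model, SCENARIOS):
        print("Unspecified:", question)
```

The three unspecified scenarios correspond to the three open questions listed above; answering them is one way the model gets expanded.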

Part of the Evaluation process that we call Software Testing is about expanding our model so that we can explore the System more thoroughly and provide more information.

We also have to go beyond our model of the requirements and identify Risks associated with the potential implementation, the technology used, or the algorithms we have implemented.

For example, there might be a risk that the password has been stored in plaintext in the database, and an additional risk that the implementation uses a ‘like’ comparison on the password rather than an ‘equals’ comparison, so it might be possible to use wildcards in the password and log in knowing only part of the password.

This would also raise wider risks about the security of the application, including SQL Injection.
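The ‘like’ versus ‘equals’ risk, and the related SQL Injection risk, can be demonstrated with Python's built-in `sqlite3` module and an in-memory database. The table layout and the three `login_*` functions are hypothetical implementations written only to show the risks, not code from any real system. The sketch also stores the password in plaintext, which is itself one of the risks described.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE users (username TEXT, password TEXT)")
# Plaintext password storage: itself one of the risks described above.
conn.execute("INSERT INTO users VALUES (?, ?)", ("alice", "s3cret"))


def _found(sql: str, params: tuple = ()) -> bool:
    """True if the query matches at least one user row."""
    return conn.execute(sql, params).fetchone()[0] > 0


def login_equals(username: str, password: str) -> bool:
    # Exact comparison with bound parameters: wildcards are literal text.
    return _found("SELECT COUNT(*) FROM users WHERE username = ? AND password = ?",
                  (username, password))


def login_like(username: str, password: str) -> bool:
    # Risky: LIKE treats % and _ in the password as wildcards.
    return _found("SELECT COUNT(*) FROM users WHERE username = ? AND password LIKE ?",
                  (username, password))


def login_concatenated(username: str, password: str) -> bool:
    # Risky: building SQL from user input allows SQL Injection.
    sql = ("SELECT COUNT(*) FROM users WHERE username = '" + username +
           "' AND password = '" + password + "'")
    return _found(sql)


if __name__ == "__main__":
    print(login_equals("alice", "s3%"))                # False
    print(login_like("alice", "s3%"))                  # True: partial password
    print(login_concatenated("alice", "' OR '1'='1"))  # True: injection
```

A Tester whose model includes the ‘like’ risk would think to try inputs such as `%` or `s3%` in the password field; a Tester without that knowledge probably would not.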

These risks might not be covered by the Requirement model, and may not be present in every Tester’s or Programmer’s model of the System, because not everyone has experience or knowledge of Security Testing.

At times there is an endless set of possible ways we could expand our model and explore it in conjunction with the system. One of the key skills of a Tester is knowing when ‘enough is enough’ and stopping the identification and exploration of possibilities.

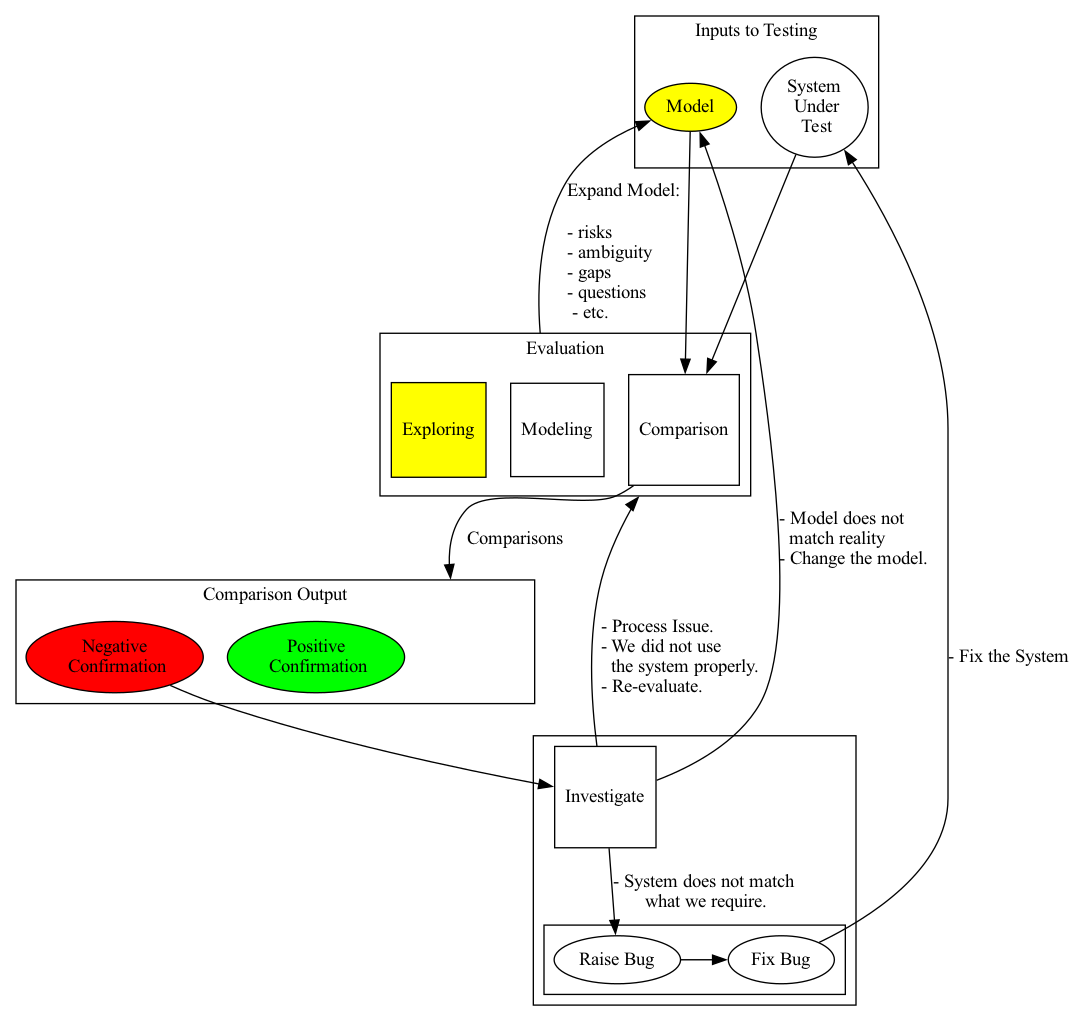

Exploring

The act of exploring a model, or using the model as a basis for exploring the system, is the main way that we test software.

Some of the outputs from this process are captured as part of the model itself.

Other outputs vary depending on the process used, but include artifacts such as:

- execution or exploration logs

- coverage reports

- questions

- decisions

- ideas for new exploration approaches

- issues

- issue investigations

Exploration is usually pursued in small chunks of time. This makes it easier to document the information gained during the exploration, and to respond to it when deciding what to test or investigate next.
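As a rough illustration, one timeboxed chunk of exploration and some of the outputs listed above could be recorded in a simple structure like the one below. The `ExplorationSession` class, its fields and the 60-minute default are hypothetical choices, not a prescribed format.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class ExplorationSession:
    """Hypothetical record of one timeboxed chunk of exploration."""
    charter: str                      # what this session set out to explore
    timebox_minutes: int = 60
    log: List[str] = field(default_factory=list)
    questions: List[str] = field(default_factory=list)
    issues: List[str] = field(default_factory=list)
    next_ideas: List[str] = field(default_factory=list)

    def summary(self) -> str:
        """A short debrief used to decide what to test or investigate next."""
        return (f"{self.charter} | log: {len(self.log)}, "
                f"questions: {len(self.questions)}, issues: {len(self.issues)}, "
                f"ideas: {len(self.next_ideas)}")


if __name__ == "__main__":
    s = ExplorationSession("Explore login error handling", timebox_minutes=30)
    s.log.append("Tried wrong password for an existing user")
    s.questions.append("What message should a non-existent user see?")
    s.next_ideas.append("Try wildcard characters in the password field")
    print(s.summary())
```

Keeping each session small keeps the record short enough to review straight away, so the next session can be chosen from what was just learned.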

Summary

This was an iterative build-up of a model of Software Testing, trying to use common terms rather than specialist language and to avoid any specific framework or development methodology.

This is “a” model. Not “the” model.

This was an example of a modelling process, and the communication of a modelling process.

For maximum benefit, create your own models of Software Testing.

The diagrams were generated with Graphviz from a single source file, using graphviz-steps.